Algorithmic Decision-Making in Pakistan: A Challenge to the Right to Equality & Non-Discrimination

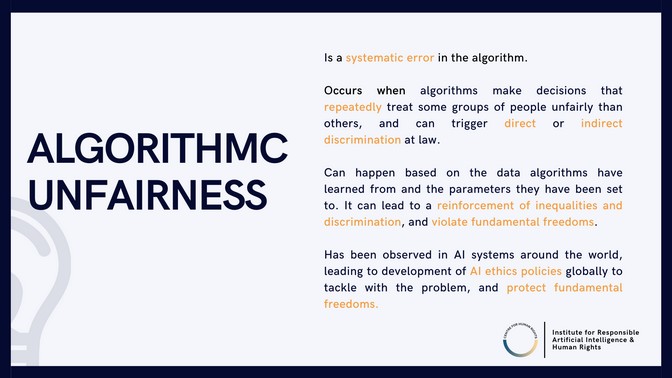

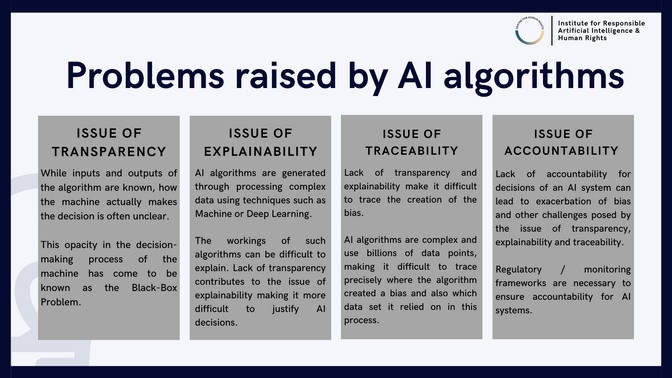

Artificial Intelligence offers numerous opportunities, but globally the alarm over its risks has sounded loudly. Despite this, the discourse in Pakistan remains focused on the opportunities of AI, while its risks, especially those posed to human rights, remain largely unaddressed. This report is intended as a conversation starter in Pakistan about the risks of AI, in particular algorithmic unfairness in automated decision-making and its implications for the constitutional guarantees of equality and non-discrimination in Pakistan.

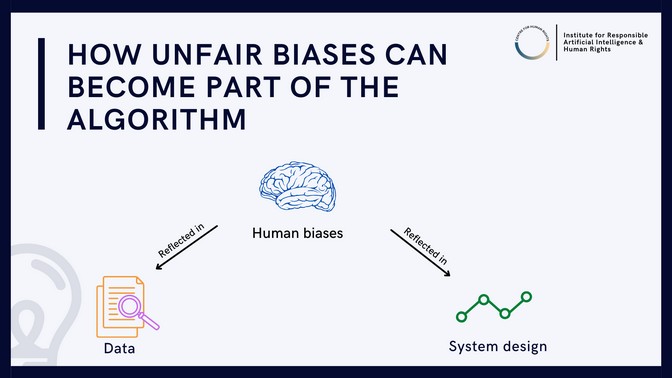

Given the technical nature of this area, the report has benefited from expert insights provided by AI researchers, AI System developers, experts working on de-biasing AI Systems, human rights experts, and legal scholars of AI. The report helps break down the technical workings of AI algorithms and explains how pre-existing inequalities in society can become embedded in an algorithm and then be reinforced through decisions made by AI Systems. The report also identifies the gap between the legal and IT sectors that needs to be bridged, through the strategic intervention of law and policy, in order to build a more sustainable future for AI. As AI involves and impacts a range of stakeholders, the report offers targeted recommendations for the way forward in Pakistan.

Project Lead:

Uzma Nazir Chaudhry

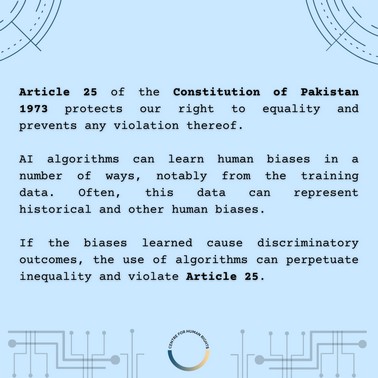

Algorithmic Justice

Algorithmic Unfairness